Proxmox

Proxmox Virtual Environment (VE) is an open source virtualization management platform that allows deployment and management of KVM-based virtual machines and LXC containers. The platform itself runs on a Debian-based distribution and supports storage backends such as ZFS and Ceph.

In terms of functionality, Proxmox VE is similar to VMware ESXi with some overlap with vSphere. You can have a cluster of Proxmox servers controlled and managed through a single web console, command line tools, or the provided REST API.

Installation

Installation is as simple as installing any other Linux distribution. Download the Proxmox iso image from https://www.proxmox.com/en/downloads. After installation, you should be able to access the web console via HTTPS on port 8006.

Tasks and How-Tos

Remove the subscription nag

If your Proxmox server has no active subscription, you will be nagged every time you log in to the web interface. This can be disabled by running the following as root in the server's console:

# sed -Ezi.bak "s/(Ext.Msg.show\(\{\s+title: gettext\('No valid sub)/void\(\{ \/\/\1/g" /usr/share/javascript/proxmox-widget-toolkit/proxmoxlib.js

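You will have to run this again after any Proxmox update that replaces proxmoxlib.js. The -i.bak option keeps a backup of the original file next to it as proxmoxlib.js.bak.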

There is also an ansible role which does this at: https://github.com/FuzzyMistborn/ansible-role-proxmox-nag-removal/blob/master/tasks/remove-nag.yml

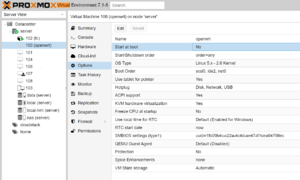

Make a VM or LXC start automatically at boot

You can enable automatic startup and the startup order for VMs and LXCs under the 'Options' panel.

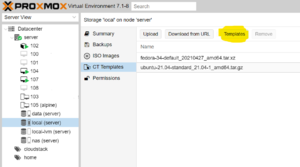
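The same options can also be set from the command line with qm (for VMs) and pct (for containers). The sketch below is a minimal example: the guest IDs 100 and 101 and the helper names are hypothetical, and by default it only prints the commands instead of running them.

```shell
#!/bin/bash
# Print the command (DRY_RUN=1, the default) or execute it (DRY_RUN=0).
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# enable_autostart TYPE ID ORDER
# TYPE is "vm" (uses qm) or "ct" (uses pct); ORDER is the position in the
# startup sequence.
enable_autostart() {
    local type="$1" id="$2" order="$3" tool
    case "$type" in
        vm) tool=qm ;;
        ct) tool=pct ;;
        *)  echo "unknown guest type: $type" >&2; return 1 ;;
    esac
    run "$tool" set "$id" --onboot 1 --startup "order=$order"
}

# Hypothetical guests: VM 100 starts first, container 101 second.
enable_autostart vm 100 1
enable_autostart ct 101 2
```

Run it with DRY_RUN=0 on the Proxmox host once the printed commands look right.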

Adding additional LXC templates

Proxmox officially supports about a dozen different Linux distributions and provides up to date LXC templates through their repositories. These LXC templates can be downloaded using the pveam tool or through the Proxmox web interface.

Adding templates using the web interface

Go to your local storage and click on CT Templates. Click on the Templates button to see available templates.

Adding templates using the pveam utility

The Proxmox VE Appliance Manager (pveam) tool is available to manage container templates from Proxmox's repository. More information at https://pve.proxmox.com/pve-docs/pveam.1.html.

Run pveam available to list all available templates, then run pveam download $storage $template to download a template to a storage pool. For example:

root@server:~# pveam available

mail proxmox-mailgateway-6.4-standard_6.4-1_amd64.tar.gz

mail proxmox-mailgateway-7.0-standard_7.0-1_amd64.tar.gz

system almalinux-8-default_20210928_amd64.tar.xz

system alpine-3.12-default_20200823_amd64.tar.xz

system alpine-3.13-default_20210419_amd64.tar.xz

system alpine-3.14-default_20210623_amd64.tar.xz

system alpine-3.15-default_20211202_amd64.tar.xz

system archlinux-base_20210420-1_amd64.tar.gz

root@server:~# pveam download local fedora-35-default_20211111_amd64.tar.xz

downloading http://download.proxmox.com/images/system/fedora-35-default_20211111_amd64.tar.xz to /var/lib/vz/template/cache/fedora-35-default_20211111_amd64.tar.xz

--2021-12-29 15:17:28-- http://download.proxmox.com/images/system/fedora-35-default_20211111_amd64.tar.xz

Resolving download.proxmox.com (download.proxmox.com)... 144.217.225.162, 2607:5300:203:7dc2::162

Connecting to download.proxmox.com (download.proxmox.com)|144.217.225.162|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 89702020 (86M) [application/octet-stream]

Saving to: '/var/lib/vz/template/cache/fedora-35-default_20211111_amd64.tar.xz.tmp.1053836'

0K ........ ........ ........ ........ 37% 2.59M 21s

32768K ........ ........ ........ ........ 74% 13.6M 5s

65536K ........ ........ ..... 100% 20.5M=16s

2021-12-29 15:17:44 (5.42 MB/s) - '/var/lib/vz/template/cache/fedora-35-default_20211111_amd64.tar.xz.tmp.1053836' saved [89702020/89702020]

calculating checksum...OK, checksum verified

download of 'http://download.proxmox.com/images/system/fedora-35-default_20211111_amd64.tar.xz' to '/var/lib/vz/template/cache/fedora-35-default_20211111_amd64.tar.xz' finished
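Since the listing can be long, it may help to pick out the newest template for a given distribution automatically. The following is a rough sketch: latest_template is a hypothetical helper, and the sample lines are copied from the listing above so that it runs without a Proxmox host.

```shell
#!/bin/bash
# latest_template NAME: read `pveam available` output on stdin and print the
# highest-versioned template whose file name starts with "NAME-".
latest_template() {
    local name="$1"
    awk -v prefix="$name-" 'index($2, prefix) == 1 { print $2 }' | sort -V | tail -n 1
}

# Sample lines taken from the listing above.
sample='system alpine-3.12-default_20200823_amd64.tar.xz
system alpine-3.13-default_20210419_amd64.tar.xz
system alpine-3.15-default_20211202_amd64.tar.xz
system archlinux-base_20210420-1_amd64.tar.gz'

printf '%s\n' "$sample" | latest_template alpine
```

On a real host the input would come from pveam itself, for example: pveam available --section system | latest_template alpine, followed by pveam download local with the printed name.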

Using Terraform with Proxmox

You can use Terraform to manage VMs on Proxmox. More information on how to set this up is on the Terraform page. Setup is relatively straightforward and the Terraform plugin for Proxmox is feature rich.

On a related note, you can also use Packer to build VM images. The Packer-Proxmox plugin supports keyboard autotyping which makes automatically building VMs all the more simple.

Install SSL certificates

Out of the box, Proxmox will generate a self-signed SSL certificate for its management interfaces. This is sufficient from a security standpoint but will result in an SSL certificate warning every time you connect. You may wish to install a signed certificate from a trusted CA to correct this issue.

Note: Changing the SSL certificates prevented my SPICE clients from connecting. I had to revert to the self-generated certificates for them to work again. If you are using SPICE for remote desktop, you may need to do further testing before rolling this out into production.

To install a new set of SSL certificates, log in to Proxmox via SSH and copy your SSL private key and certificates to /etc/pve/nodes/$node/pve-ssl.{pem,key}. Replace '$node' with the hostname of your Proxmox machine:

- The pve-ssl.pem file should contain your full certificate chain. This can be generated by concatenating your primary certificate, followed by any intermediate certificates.

- The pve-ssl.key file should contain your private key without a password.

Restart the pveproxy service to apply. If you have any problems, check the service's status with systemctl status pveproxy.

# cp fullchain.pem /etc/pve/nodes/<node>/pve-ssl.pem

# cp private-key.pem /etc/pve/nodes/<node>/pve-ssl.key

# systemctl restart pveproxy
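Building the full chain is just a concatenation in the right order: your own certificate first, then the intermediates. A minimal sketch follows; build_fullchain and the file names in the usage comment are hypothetical.

```shell
#!/bin/bash
# build_fullchain LEAF [INTERMEDIATE...]: print the leaf certificate followed
# by each intermediate, in the order given.
build_fullchain() {
    local f
    for f in "$@"; do
        [ -r "$f" ] || { echo "cannot read $f" >&2; return 1; }
        cat "$f"
        # Add a newline if the file does not end with one, so that PEM blocks
        # from consecutive files do not run together on one line.
        [ -z "$(tail -c 1 "$f")" ] || echo
    done
}

# Usage (hypothetical file names):
#   build_fullchain cert.pem intermediate.pem > fullchain.pem
```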

Reduce ZFS ARC memory requirement

By default, ZFS's ARC uses up to 50% of the host's memory. If the VMs you run on a ZFS storage pool together need more than 50% of the system's available memory, you will need to reduce the amount of memory assigned to ARC to prevent out-of-memory issues.

The minimum memory assigned to ARC as per Proxmox's documentation is 2GB + 1GB per TB of storage.

To change the ARC max size to 3 GB: echo "$((3 * 1024*1024*1024))" > /sys/module/zfs/parameters/zfs_arc_max

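To make this permanent, edit /etc/modprobe.d/zfs.conf and add options zfs zfs_arc_max=3221225472. If your root filesystem is on ZFS, also run update-initramfs -u -k all so that the setting is picked up at boot.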
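The byte values above are easy to get wrong by hand, so here is a small sketch of the arithmetic. It treats GB and TB as GiB and TiB (an assumption), and the 4 TiB pool size is a made-up example.

```shell
#!/bin/bash
# gib_to_bytes N: convert N GiB to bytes.
gib_to_bytes() {
    echo $(( $1 * 1024 * 1024 * 1024 ))
}

# arc_min_gib POOL_TIB: the rule of thumb quoted above,
# 2 GiB base plus 1 GiB per TiB of pool storage.
arc_min_gib() {
    echo $(( 2 + $1 ))
}

pool_tib=4    # hypothetical pool size in TiB
arc_gib=$(arc_min_gib "$pool_tib")

# Line to place in /etc/modprobe.d/zfs.conf
echo "options zfs zfs_arc_max=$(gib_to_bytes "$arc_gib")"
```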

Enable QEMU VNC server on a guest VM

There are a few options when you want to remote desktop into a VM, such as running an RDP/VNC server within the VM or using the SPICE display/connection option built into Proxmox. These have their own advantages and disadvantages.

I have recently started using the built-in VNC server that can be enabled on the KVM/QEMU config. The benefit with this approach is that you can control the VM during the boot process as you're seeing and controlling the VM as you would in the Proxmox web interface.

To enable VNC on a particular VM:

- Edit /etc/pve/local/qemu-server/<vmid>.conf and add the following args line to enable the VNC server on port 5901. The number after the colon is the VNC display number, which is added to 5900 to get the TCP port, so display 1 is really TCP port 5901. You may change this number if you have other VNC servers running.

args: -vnc 0.0.0.0:1,password=on

- In the same .conf file, add the following line to set the VNC password when the VM starts up.

hookscript: local:snippets/set_vnc_password.sh

- Create the snippet by creating a file at /var/lib/vz/snippets/set_vnc_password.sh and make it executable. Put the following in the script. Note the VNC password is set in plain text here.

#!/bin/bash

# Proxmox calls hookscripts with the VM ID as $1 and the phase as $2.

if [[ "$2" != "post-start" ]] ; then

  echo "Not at post-start stage. Skipping"

  exit 0

fi

VM_ID=$1

VNC_PASSWORD=myvncpassword

# Drive the QEMU monitor with expect to set the VNC password.

expect -c "

set timeout 5

spawn qm monitor $VM_ID

expect \"qm>\"

send \"set_password vnc $VNC_PASSWORD -d vnc2\r\"

expect \"qm>\"

send \"exit\r\"

exit

"

echo

echo "VNC password set."

- Test this by booting the VM. You should be able to connect to the Proxmox server on port 5901 using VNC and authenticate using the password set in the script.
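The display-number arithmetic described in the first step can be written down as two tiny helpers (the function names are made up for this example):

```shell
#!/bin/bash
# vnc_port DISPLAY: TCP port that a VNC display number listens on.
vnc_port() {
    echo $(( 5900 + $1 ))
}

# vnc_display PORT: display number that corresponds to a TCP port.
vnc_display() {
    echo $(( $1 - 5900 ))
}

vnc_port 1        # the display used in the args line above
vnc_display 5915  # a port in the 59xx range maps back to a display number
```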

VNC server listening on a local socket

Alternatively, you may have the VNC server listen on a local socket. To do so, adjust the args with the following:

## Listen on a socket (worked for me)

args: -object secret,id=vncpass,data=password -vnc unix:/var/run/qemu-server/$vmid-secondary.vnc,password-secret=vncpass

You may need to use the same snippet script above to set the password since libvirt/Proxmox may set a random passphrase on the VNC server.

With the VNC server running and listening on a socket, you may expose the socket as a TCP port using something like socat:

# socat tcp4-listen:5915,fork,reuseaddr unix-connect:/var/run/qemu-server/115-secondary.vnc

Attach a disk from another VM

To attach a disk to another VM, you must first detach the disk from the source VM. To do so, navigate to the source VM's hardware panel, select the disk to detach, then click on 'Disk Action' and then 'Detach'. Then, go to 'Disk Action' -> 'Reassign Disk' and select the destination VM.

The manual process

Note: This is no longer recommended. These steps predate the reassign disk feature and are kept here for legacy reasons.

If you are using ZFS for the VM disks, you can attach a disk from the source VM to another target VM by renaming the disk so that the VM ID in its name matches the target VM. You can run zfs list on the Proxmox server to see what disks are available. Once the disk is renamed, it can be made visible as an unused disk by running qm rescan to rescan and update the VM configs. From the Proxmox console, you should then be able to attach the unused disk under the hardware page.

For example, to attach a VM disk which was originally connected to VM 101 to VM 102, I had to rename the disk vm-101-disk-0 to vm-102-disk-1 by running: zfs rename data/vm-101-disk-0 data/vm-102-disk-1.
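The new name has to use a disk index that the target VM does not already have, which is why the example ends in disk-1 rather than disk-0. A sketch of how that index could be picked is below; next_disk_index is a hypothetical helper and the volume names are sample data.

```shell
#!/bin/bash
# next_disk_index VMID: read volume names on stdin (one per line, for example
# from `zfs list -H -o name`) and print the lowest unused N in vm-VMID-disk-N.
next_disk_index() {
    local vmid="$1" used n=0
    # Collect the disk indexes that already exist for this VM ID.
    used=$(sed -n "s|.*vm-${vmid}-disk-\([0-9][0-9]*\)\$|\1|p")
    while printf '%s\n' "$used" | grep -qx "$n"; do
        n=$(( n + 1 ))
    done
    echo "$n"
}

# Sample data: VM 102 already owns disk-0, so the next free index is 1.
printf '%s\n' data/vm-101-disk-0 data/vm-102-disk-0 | next_disk_index 102
```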

Renumber VM IDs

Use the following script to renumber a VM's ID. This script supports VMs that are stored on both LVM and ZFS. Power off the VM before proceeding. Proxmox should be able to see the VM being renamed automatically.

Be aware that renumbering a VM may cause Cloud-Init to run again, as it may think it is on a new instance. This could have unintended side effects such as having your system's SSH host keys wiped and account passwords reset.

#!/bin/bash

Old="$1"

New="$2"

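usage(){

  echo "Usage: $0 old-vmid new-vmid"

  exit 2

}

# Both arguments must be numeric VM IDs.

if [ -z "$Old" ] || ! expr "$Old" : '^[0-9]\+$' >/dev/null ; then

  echo "Error: Invalid old-vmid"

  usage

fi

if [ ! -f /etc/pve/qemu-server/$Old.conf ] ; then

  echo "Error: $Old is not a valid VM ID"

  exit 1

fi

if [ -z "$New" ] || ! expr "$New" : '^[0-9]\+$' >/dev/null ; then

  echo "Error: Invalid new-vmid"

  usage

fi

# VM and container IDs share one namespace, so check both.

if [ -e /etc/pve/qemu-server/$New.conf ] || [ -e /etc/pve/lxc/$New.conf ] ; then

  echo "Error: $New is already in use"

  exit 1

fi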

echo "Found these disks in the config:"

cat /etc/pve/qemu-server/$Old.conf | grep -i -- vm-$Old- | awk -F, '{print $1}' | awk '{print $2}'

echo "Found these disks on LVM:"

lvs --noheadings -o lv_name,vg_name | grep "vm-$Old-"

echo "Found these disks on ZFS:"

zfs list | grep "vm-$Old-" | awk '{print $1}'

# Only the ID inside volume names (vm-<id>-) is replaced, so other numbers

# in names and in the config (memory sizes, MAC addresses) are left alone.

echo "Will execute the following:"

echo

lvs --noheadings -o lv_name,vg_name | grep "vm-$Old-" | while read lv vg ; do

  echo lvrename $vg/$lv $vg/$(echo $lv | sed "s/vm-$Old-/vm-$New-/g")

done

zfs list | grep "vm-$Old-" | awk '{print $1}' | while read i ; do

  echo zfs rename $i $(echo $i | sed "s/vm-$Old-/vm-$New-/g")

done

echo sed -i "s/vm-$Old-/vm-$New-/g" /etc/pve/qemu-server/$Old.conf

echo mv /etc/pve/qemu-server/$Old.conf /etc/pve/qemu-server/$New.conf

echo

read -p "Proceed with the rename? (y/n) " answer

case $answer in

  [yY]* ) echo "Proceeding...";;

  * ) echo "Aborting..."; exit;;

esac

echo "Task: Rename LVM logical volumes"

lvs --noheadings -o lv_name,vg_name | grep "vm-$Old-" | while read lv vg ; do

  lvrename $vg/$lv $vg/$(echo $lv | sed "s/vm-$Old-/vm-$New-/g")

done

echo "Task: Rename ZFS datasets"

zfs list | grep "vm-$Old-" | awk '{print $1}' | while read i ; do

  zfs rename $i $(echo $i | sed "s/vm-$Old-/vm-$New-/g")

done

echo "Renumbering IDs"

sed -i "s/vm-$Old-/vm-$New-/g" /etc/pve/qemu-server/$Old.conf

mv /etc/pve/qemu-server/$Old.conf /etc/pve/qemu-server/$New.conf
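Before running a rename like this, it is worth checking how the ID substitution behaves on names that contain the same digits elsewhere. The sketch below compares a plain substitution with one anchored on the vm-ID- component; both helper names and the volume name are made up for illustration.

```shell
#!/bin/bash
# rename_plain NAME OLD NEW: replace every occurrence of OLD in NAME.
rename_plain() {
    printf '%s\n' "$1" | sed "s/$2/$3/g"
}

# rename_anchored NAME OLD NEW: replace only the vm-OLD- component.
rename_anchored() {
    printf '%s\n' "$1" | sed "s/vm-$2-/vm-$3-/g"
}

# Renumbering VM 1 to VM 2 for its second disk (disk-1):
rename_plain    rpool/data/vm-1-disk-1 1 2   # also changes the disk index
rename_anchored rpool/data/vm-1-disk-1 1 2   # changes only the VM ID
```

The anchored form is the safer choice whenever the old ID is short enough to appear in a disk index, a pool name, or elsewhere in the VM config.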

Remove a single node cluster

If you created a single node cluster and want to undo it, run:

# systemctl stop pve-cluster corosync

# pmxcfs -l

# rm /etc/corosync/*

# rm /etc/pve/corosync.conf

# killall pmxcfs

# systemctl start pve-cluster

This was copied from: https://forum.proxmox.com/threads/proxmox-ve-6-removing-cluster-configuration.56259/

Run Docker in LXC

Docker works in Proxmox LXC but only if the nesting option is enabled. Docker works on either privileged or unprivileged LXC, but each has its own caveats:

| Feature | Privileged | Unprivileged |

|---|---|---|

| NFS | Can mount NFS; requires nfs=1 enabled in LXC configuration | Cannot mount NFS unless you use a bind mountpoint |

| Security | UIDs are not mapped; may be dangerous | UIDs/GIDs are mapped |

| OverlayFS | ZFS backing filesystem for /var/lib/docker should have no issues. | /var/lib/docker should use ext4 or XFS (or some other non-ZFS filesystem; more on this below). |

It's recommended that you do not run Docker in LXC unless you have a good reason such as: a) hardware passthrough on systems that cannot support it through VMs, or b) tight memory constraints where containerized workloads benefit from shared memory.

Issues with /var/lib/docker on a ZFS backed storage

Docker uses overlayfs to handle the container layers. On ZFS-backed storage, overlayfs requires additional privileges to work properly. For unprivileged LXC, certain Docker image pulls may fail if the image data is stored on ZFS storage. To work around this issue, you will have to use a different filesystem for /var/lib/docker by making a zvol and then formatting it with a different filesystem (see https://du.nkel.dev/blog/2021-03-25_proxmox_docker/). Alternatively, put the /var/lib/docker volume on non-ZFS storage such as LVM-backed storage.

This issue shows up as failures when pulling some (but not all) container images, with errors similar to:

[root@nixos:~]# docker-compose pull

[+] Pulling 8/10

⠋ pihole 9 layers [⣿⣿⣿⣿⣿⣿⣿⣿⣿] 23.91MB/23.91MB Pulling

✔ f03b40093957 Pull complete

✔ 8063479210c7 Pull complete

✔ 4f4fb700ef54 Pull complete

✔ 061a6a1d9010 Pull complete

✔ 8b1e64a56394 Pull complete

✔ 8dabcf07e578 Pull complete

⠿ bdec3efaf98a Extracting [==================================================>] 23.91MB/23.91MB

✔ 40cba0bade6e Download complete

✔ 9b797b6be3f3 Download complete

failed to register layer: ApplyLayer exit status 1 stdout: stderr: unlinkat /var/cache/apt/archives: invalid argument

Switching /var/lib/docker to an ext4-backed filesystem fixed the issue. This can be done with the following steps:

- Create the zvol:

zfs create -V 8G rpool/data/ctvol-111-disk-1

- Format the zvol:

mkfs.ext4 /dev/zvol/rpool/data/ctvol-111-disk-1

- Edit the LXC config under /etc/pve/nodes/pve/lxc/111.conf and add the following mountpoint config:

mp0: /dev/zvol/rpool/data/ctvol-111-disk-1,mp=/var/lib/docker
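To confirm from inside the container which filesystem actually backs /var/lib/docker (before and after the change), something like the following sketch could be used. The helper names are hypothetical, and it assumes a stat that reports ZFS as "zfs", which is what GNU coreutils does as far as I know.

```shell
#!/bin/bash
# fs_type PATH: print the type of the filesystem that PATH lives on.
fs_type() {
    stat -f -c %T "$1"
}

# on_zfs PATH: succeed if PATH is stored on a ZFS filesystem.
on_zfs() {
    [ "$(fs_type "$1")" = "zfs" ]
}

docker_dir="${DOCKER_DIR:-/var/lib/docker}"
# Fall back to / if Docker is not installed, so the sketch still runs.
[ -d "$docker_dir" ] || docker_dir=/

if on_zfs "$docker_dir"; then
    echo "$docker_dir is on ZFS; image pulls may fail in an unprivileged LXC"
else
    echo "$docker_dir is on $(fs_type "$docker_dir")"
fi
```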

Install NVIDIA driver in Proxmox

The NVIDIA Linux driver requires the following dependencies:

# apt install gcc make pve-headers

As part of the installation, the driver will ensure nouveau is disabled. It'll blacklist the nouveau kernel module for you but you will have to reboot (or rmmod it) before running the installer again.

Once you have the NVIDIA drivers installed, to hardware accelerate your VM:

- Set the graphics card of the VM to VirGL GPU

- Install the needed dependencies:

apt install libgl1 libegl1

Setup LXC with NVIDIA GPU

You can share your NVIDIA GPU with one or more LXC in Proxmox. This is possible only if the following conditions are met:

- NVIDIA drivers are installed on Proxmox.

# wget https://us.download.nvidia.com/XFree86/Linux-x86_64/535.54.03/NVIDIA-Linux-x86_64-535.54.03.run

# chmod 755 NVIDIA-Linux-x86_64-535.54.03.run

# ./NVIDIA-Linux-x86_64-535.54.03.run

- The exact same NVIDIA drivers are installed in the LXC. (Basically, run the same thing as above; inside the container you would normally pass --no-kernel-module to the installer, since the kernel module comes from the host. If you're using NixOS, you might have to do some overrides or match the drivers on Proxmox to what was pulled in when you do nixos-rebuild switch).

- The LXC is allowed to access the NVIDIA devices using the lxc.cgroup2.devices.allow setting. Do a listing of all the /dev/nvidia* devices and ensure that you catch all the device major numbers.

lxc.cgroup2.devices.allow: c 226:* rwm

lxc.cgroup2.devices.allow: c 195:* rwm

lxc.cgroup2.devices.allow: c 508:* rwm

- The NVIDIA devices are passed through to the LXC using the lxc.mount.entry setting. Ensure that you capture all the /dev/nvidia* devices that are appropriate for your card.

lxc.mount.entry: /dev/dri/card1 dev/dri/card0 none bind,optional,create=file

lxc.mount.entry: /dev/dri/renderD129 dev/dri/renderD128 none bind,optional,create=file

lxc.mount.entry: /dev/nvidia0 dev/nvidia0 none bind,optional,create=file

lxc.mount.entry: /dev/nvidiactl dev/nvidiactl none bind,optional,create=file

lxc.mount.entry: /dev/nvidia-uvm dev/nvidia-uvm none bind,optional,create=file

lxc.mount.entry: /dev/nvidia-caps dev/nvidia-caps none bind,optional,create=file

lxc.mount.entry: /dev/nvidia-uvm-tools dev/nvidia-uvm-tools none bind,optional,create=file

- Start the LXC. You should be able to run 'nvidia-smi' from inside the container and have it see the status of the card. If it doesn't, double-check everything above: ensure your driver version numbers match (it would tell you if they didn't) and that you have the appropriate device numbers allowed.
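Rather than reading the major numbers off ls -l by hand, the allow lines can be generated from the device nodes themselves. This is only a sketch: allow_lines is a hypothetical helper, and it is demonstrated on /dev/null so that it runs on a machine without a GPU.

```shell
#!/bin/bash
# allow_lines DEVICE...: print one lxc.cgroup2.devices.allow line per distinct
# character-device major number among the given device nodes.
allow_lines() {
    local dev major
    for dev in "$@"; do
        # Skip directories (such as /dev/nvidia-caps) and missing paths.
        [ -c "$dev" ] || continue
        # stat prints the major number in hex; convert it to decimal.
        major=$(printf '%d' "0x$(stat -c %t "$dev")")
        echo "lxc.cgroup2.devices.allow: c ${major}:* rwm"
    done | sort -u
}

# On a Proxmox host: allow_lines /dev/nvidia* /dev/nvidia-caps/* /dev/dri/*
# Demonstration on a device that exists everywhere (major number 1):
allow_lines /dev/null
```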

How to set up Jellyfin on a Ubuntu based LXC

I used to run Jellyfin under a Docker VM with an NVIDIA GPU using PCIe passthrough, but I wanted to try running Jellyfin on another machine which doesn't support IOMMU. To work around this, I attempted to run Jellyfin within an LXC.

What I did:

- Create an LXC as a privileged container. Two reasons for this: 1) the device passthrough sort of needs it and 2) I need to mount my Jellyfin media files via NFS.

- Set up the LXC with an Ubuntu image. Install Jellyfin as per the https://jellyfin.org/docs/general/installation/linux/#ubuntu instructions. Additionally, install the jellyfin-ffmpeg5 libnvidia-decode-525 libnvidia-encode-525 nvidia-utils-525 packages for GPU support. I used version 525 as that was the most recent stable version offered by NVIDIA at the time. Note that because we're using these pre-packaged binaries, the driver you install on the PVE must match the exact version.

- Look at the specific version of the NVIDIA packages that were installed in the previous step. You can also run apt search libnvidia-encode to see what versions are available. Download and install the NVIDIA drivers on the Proxmox server itself while ensuring you get the exact version that matches the packages you installed in the LXC environment.

- Manually edit the LXC config under /etc/pve/lxc/<id>.conf and add the lines below. /dev/dri/card1 and /dev/dri/renderD129 are the devices that appeared after installing the NVIDIA drivers on the PVE, which made it obvious that they were what I wanted. If you're unsure, you could try unloading the NVIDIA driver and see what disappears.

lxc.cgroup2.devices.allow: c 226:* rwm

lxc.cgroup2.devices.allow: c 195:* rwm

lxc.mount.entry: /dev/dri/card1 dev/dri/card0 none bind,optional,create=file

lxc.mount.entry: /dev/dri/renderD129 dev/dri/renderD128 none bind,optional,create=file

lxc.mount.entry: /dev/nvidia0 dev/nvidia0 none bind,optional,create=file

lxc.mount.entry: /dev/nvidiactl dev/nvidiactl none bind,optional,create=file

lxc.mount.entry: /dev/nvidia-uvm dev/nvidia-uvm none bind,optional,create=file

lxc.mount.entry: /dev/nvidia-caps dev/nvidia-caps none bind,optional,create=file

lxc.mount.entry: /dev/nvidia-uvm-tools dev/nvidia-uvm-tools none bind,optional,create=file

- Restart the LXC environment and see if nvidia-smi works. If it doesn't, ensure your version numbers match (it would tell you if they didn't) and that you have the appropriate devices bind mounted into the LXC.

- Continue with the Jellyfin installation as usual. Run:

wget -O- https://repo.jellyfin.org/install-debuntu.sh | sudo bash
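Because the host driver and the container packages have to match exactly, a quick comparison can save a round of debugging. The sketch below uses made-up version strings; on real systems they would come from the commands named in the comments. It assumes the package version has no epoch prefix.

```shell
#!/bin/bash
# upstream_version PKGVER: strip the Debian/Ubuntu revision from a package
# version, e.g. 525.125.06-0ubuntu0.22.04.1 becomes 525.125.06.
upstream_version() {
    printf '%s\n' "${1%%-*}"
}

# versions_match HOST_VERSION PKG_VERSION: succeed if the host driver version
# equals the upstream part of the container's package version.
versions_match() {
    [ "$1" = "$(upstream_version "$2")" ]
}

# Hypothetical values. On the PVE host:
#   nvidia-smi --query-gpu=driver_version --format=csv,noheader
host_version="525.125.06"
# Inside the LXC:
#   dpkg-query -W -f='${Version}' libnvidia-encode-525
pkg_version="525.125.06-0ubuntu0.22.04.1"

if versions_match "$host_version" "$pkg_version"; then
    echo "match"
else
    echo "mismatch: host $host_version, container $(upstream_version "$pkg_version")"
fi
```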

How to run Docker on a NixOS based LXC

I later moved Jellyfin to NixOS running docker in LXC. This was done to simplify the Jellyfin update process and to leverage my existing docker-compose infrastructure. The NixOS LXC is privileged with nesting enabled (as both are required by Docker).

Here is the NixOS config to get Docker installed and with the NVIDIA drivers and runtime set up:

virtualisation.docker = {

enable = true;

enableNvidia = true;

};

# Make sure opengl is enabled

hardware.opengl = {

enable = true;

driSupport = true;

driSupport32Bit = true;

};

# NVIDIA drivers are unfree.

nixpkgs.config.allowUnfreePredicate = pkg:

builtins.elem (lib.getName pkg) [

"nvidia-x11"

"nvidia-settings"

"nvidia-persistenced"

];

# Tell Xorg to use the nvidia driver

services.xserver.videoDrivers = ["nvidia"];

hardware.nvidia = {

package = config.boot.kernelPackages.nvidiaPackages.production;

};

After doing a NixOS rebuild, Docker works, and Docker containers using the nvidia runtime also work.

Troubleshooting NVIDIA LXC issues

Missing nvidia-uvm

You also need the CUDA device nvidia-uvm passed through.

When this device is missing, the ffmpeg bundled with Jellyfin (built with CUDA support) fails with this error message:

[AVHWDeviceContext @ 0x55eea5d88f80] cu->cuInit(0) failed -> CUDA_ERROR_UNKNOWN: unknown error

Device creation failed: -542398533.

Failed to set value 'cuda=cu:0' for option 'init_hw_device': Generic error in an external library

Error parsing global options: Generic error in an external library

If the device isn't missing but something is misconfigured, strace would show the process attempting to open the device but failing:

openat(AT_FDCWD, "/dev/nvidia-uvm", O_RDWR|O_CLOEXEC) = -1 EPERM (Operation not permitted)

openat(AT_FDCWD, "/dev/nvidia-uvm", O_RDWR) = -1 EPERM (Operation not permitted)

The fix

If the devices /dev/nvidia-uvm and /dev/nvidia-uvm-tools aren't available on the PVE (i.e. they're missing), then you will have to create them:

## Get the device number

# grep nvidia-uvm /proc/devices | awk '{print $1}'

## Then create the device node using the device number above (508 for me, but this can change!)

# mknod -m 666 /dev/nvidia-uvm c 508 0

# mknod -m 666 /dev/nvidia-uvm-tools c 508 1

See also: https://old.reddit.com/r/qnap/comments/s7bbv6/fix_for_missing_nvidiauvm_device_devnvidiauvm/
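Since the major number can change between boots or driver versions, the lookup and the mknod commands can be tied together. The sketch below only prints the commands. It uses minor 1 for nvidia-uvm-tools, which is what I would expect from the nodes the driver creates itself; treat that as an assumption and compare with ls -l /dev/nvidia-uvm* on a working system.

```shell
#!/bin/bash
# uvm_major [FILE]: print the major number registered for nvidia-uvm.
# FILE defaults to /proc/devices.
uvm_major() {
    awk '$2 == "nvidia-uvm" { print $1 }' "${1:-/proc/devices}"
}

# print_mknod MAJOR: print the commands that would create the device nodes.
print_mknod() {
    echo "mknod -m 666 /dev/nvidia-uvm c $1 0"
    echo "mknod -m 666 /dev/nvidia-uvm-tools c $1 1"
}

major=$(uvm_major)
if [ -n "$major" ]; then
    print_mknod "$major"
else
    echo "nvidia-uvm is not registered; is the nvidia-uvm module loaded?"
fi
```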

If you're using LXC, you also have to edit the LXC config file (at /etc/pve/nodes/pve/lxc/###.conf) and add the following. Adjust the device numbers as needed.

lxc.cgroup2.devices.allow: c 226:* rwm

lxc.cgroup2.devices.allow: c 195:* rwm

lxc.cgroup2.devices.allow: c 510:* rwm

lxc.mount.entry: /dev/dri/card1 dev/dri/card0 none bind,optional,create=file

lxc.mount.entry: /dev/dri/renderD129 dev/dri/renderD128 none bind,optional,create=file

lxc.mount.entry: /dev/nvidia0 dev/nvidia0 none bind,optional,create=file

lxc.mount.entry: /dev/nvidiactl dev/nvidiactl none bind,optional,create=file

lxc.mount.entry: /dev/nvidia-uvm dev/nvidia-uvm none bind,optional,create=file

lxc.mount.entry: /dev/nvidia-caps dev/nvidia-caps none bind,optional,create=file

lxc.mount.entry: /dev/nvidia-uvm-tools dev/nvidia-uvm-tools none bind,optional,create=file

Randomly losing NVIDIA device access

Whenever you change an LXC setting (such as memory or CPU), access to the NVIDIA GPUs from within the LXC breaks. Any existing processes with the NVIDIA GPU opened will continue to work, but any new processes trying to access the GPU will fail. The only fix is to restart the LXC.

Replace a failed ZFS mirror boot device

If you are using ZFS mirror as the OS boot device and need to replace one of the disks in the mirror, keep in mind the following:

- Each disk in the ZFS mirror has 3 partitions: 1. BIOS boot, 2. grub, 3. ZFS block device. When you replace a disk, you will have to recreate this partition scheme before you can replace the ZFS device using the 3rd partition.

- Grub is installed on the 2nd partition on all disks in the ZFS mirror. If you are replacing the disk that your BIOS uses to boot, you should still be able to boot Proxmox by booting off the other disks from the boot menu. After replacing the failed disk, you can reinstall the grub bootloader.

The steps to replace a failed disk in a ZFS mirror that's used as the boot device on Proxmox are:

- Remove the failed device and replace it with the new device.

- Once you see the new device on your system (with lsblk), copy the partition table from a healthy device:

sgdisk <healthy bootable device> -R <new device>

- Randomize the GUIDs on the new device:

sgdisk -G <new device>

- Replace the failed device in your zpool. Check the status with zpool status and then replace the failed device with:

zpool replace -f <pool> <old zfs partition> <new zfs partition>

- Reinstall the bootloader on the grub partition on the new device by formatting the partition:

proxmox-boot-tool format /dev/sda2

- Then reinstall grub:

proxmox-boot-tool init /dev/sda2
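Put together, the sequence looks like the sketch below, which only prints the commands for review. The device names and pool name are hypothetical, and it assumes sdX-style partition names (partition 2 for the bootloader, partition 3 for ZFS). The GUIDs are randomized on the new disk, which is how I understand Proxmox's documentation.

```shell
#!/bin/bash
# replacement_plan HEALTHY NEW POOL OLD_PART: print the commands to replace a
# failed boot mirror member, in the order they would be run.
replacement_plan() {
    local healthy="$1" new="$2" pool="$3" old_part="$4"
    echo "sgdisk $healthy -R $new"                     # copy partition table to the new disk
    echo "sgdisk -G $new"                              # randomize GUIDs on the new disk
    echo "zpool replace -f $pool $old_part ${new}3"    # resilver onto the new ZFS partition
    echo "proxmox-boot-tool format ${new}2"            # format the bootloader partition
    echo "proxmox-boot-tool init ${new}2"              # reinstall the bootloader
}

# Hypothetical layout: /dev/sdb is healthy, /dev/sda is the new disk.
replacement_plan /dev/sdb /dev/sda rpool /dev/sda3
```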

See Proxmox's sysadmin documentation on this topic at: https://pve.proxmox.com/pve-docs/chapter-sysadmin.html#sysboot_proxmox_boot_setup

Migrating VMs from a Proxmox OS disk

I wanted to reinstall Proxmox on another disk on the same machine while preserving the VMs and containers. This required installing a clean copy of Proxmox on the new drive and then migrating all existing VMs and containers to the new install from the existing disk.

I was able to get this all done by:

- On the old install (while it's still running), tar up /etc/pve and /var/lib/rrdcached/db. You have to do this while the old install is running because /etc/pve is a FUSE filesystem (pmxcfs) that is only populated while the pve-cluster service is running, so the .conf files are not visible on the disk when the system is off. Restore the archive to the new Proxmox install.

- Add the old disk back as storage on the new Proxmox install. You do not and should not need to format anything. For LVM storage, just add an LVM storage and select the appropriate device. Your VM disks should appear and you should then be able to migrate them or attach them to the VMs which you imported in the previous step.
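The backup step amounts to a single tar invocation. Below is a sketch with a hypothetical backup_dirs helper; it is demonstrated on a scratch directory so that it can be tried anywhere, with the real paths shown in the closing comment.

```shell
#!/bin/bash
# backup_dirs ARCHIVE ARGS...: create a gzip-compressed tar archive; ARGS are
# passed to tar unchanged (directories, or options such as -C).
backup_dirs() {
    local archive="$1"
    shift
    tar -czf "$archive" "$@"
}

# Demonstration on a scratch copy of the layout.
demo=$(mktemp -d)
mkdir -p "$demo/etc/pve/qemu-server"
echo "memory: 2048" > "$demo/etc/pve/qemu-server/100.conf"
backup_dirs "$demo/backup.tar.gz" -C "$demo" etc/pve
tar -tzf "$demo/backup.tar.gz"

# On the old install (file name is an example):
#   backup_dirs /root/pve-config.tar.gz /etc/pve /var/lib/rrdcached/db
```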

Troubleshooting

LXC container does not start networking

I have a Fedora-based LXC container which takes a long time to start up. After the tty does start, its only network adapter isn't up. systemd-networkd is also in a failed state with a bad exit code and status 226/NAMESPACE.

This was resolved by enabling nesting on this container with the following commands:

# pct set $CTID -features nesting=1

# pct reboot $CTID

PCIe passthrough fails due to platform RMRR

This is covered in more detail on the HP DL380 page under 'PCIe passthrough failing'. Basically, the workaround for this is to install a patched kernel that doesn't respect the RMRR restrictions.

If you want to build your own patched kernel, you might be interested in this repo: https://github.com/sarrchri/relax-intel-rmrr

NVIDIA driver crashes with KVM using VirGL GPU

On Proxmox 7.4 with NVIDIA driver version 535.54.03 installed, starting a virtual machine with a VirGL GPU device results in KVM segfaulting:

[Wed Jul 26 11:13:38 2023] kvm[559117]: segfault at 0 ip 00007ff13a39ca23 sp 00007ffd4eb76450 error 4 in libnvidia-eglcore.so.535.54.03[7ff1398bd000+164e000]

[Wed Jul 26 11:13:38 2023] Code: 48 8b 45 10 44 8b a0 04 0d 00 00 e9 44 fe ff ff 0f 1f 80 00 00 00 00 48 8b 77 08 48 89 df e8 e4 4d 78 ff 48 8b 7d 10 48 89 de <48> 8b 07 ff 90 98 01 00 00 48 8b 43 38 e9 f2 fd ff ff 0f 1f 00 48

A reboot of the Proxmox server did not help.

Fix: I re-installed the NVIDIA drivers but also answered 'yes' for the 32bit libraries to be installed. Perhaps that was the fix, or the act of re-installing the driver recompiled the driver on the current running kernel which fixed the issue.

Network interface reset: Detected Hardware Unit Hang

I have a machine with an Intel Corporation 82579V Gigabit network adapter which periodically stutters, especially when the server is under heavy load (mainly I/O with some CPU). When it does, the following kernel message is logged:

[Fri Aug 18 00:05:03 2023] e1000e 0000:00:19.0 eno1: Detected Hardware Unit Hang:

TDH <1d>

TDT <77>

next_to_use <77>

next_to_clean <1c>

buffer_info[next_to_clean]:

time_stamp <105642dfd>

next_to_watch <1d>

jiffies <1056437b0>

next_to_watch.status <0>

MAC Status <40080083>

PHY Status <796d>

PHY 1000BASE-T Status <3800>

PHY Extended Status <3000>

PCI Status <10>

[Fri Aug 18 00:05:03 2023] e1000e 0000:00:19.0 eno1: Reset adapter unexpectedly

When this happens, all networking pauses temporarily and may cause connections to break.

Some online research suggests the issue could be a bug in the e1000e driver which, in conjunction with TCP segmentation offloading (TSO), may cause the interface to hang. The workaround is to disable hardware TSO, which you can do with ethtool:

# ethtool -K eno1 tso off gso off

This change will revert on the next reboot. If it helps with your situation, you can make it persistent by editing /etc/network/interfaces with a post-up command:

auto eno1

iface eno1 inet static

address 10.1.2.2

netmask 255.255.252.0

gateway 10.1.1.1

post-up /sbin/ethtool -K eno1 tso off gso off
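To check whether the setting is in effect (for example after a reboot), the feature list printed by ethtool -k can be parsed. A sketch with a hypothetical offload_state helper is below; the sample text mimics the format of ethtool -k output and is not taken from a real machine.

```shell
#!/bin/bash
# offload_state FEATURE: read `ethtool -k IFACE` output on stdin and print the
# state ("on" or "off") of the named feature.
offload_state() {
    awk -v feat="$1:" '$1 == feat { print $2 }'
}

# Sample input in the format that ethtool -k prints.
sample='Features for eno1:
tcp-segmentation-offload: off
generic-segmentation-offload: off
generic-receive-offload: on'

printf '%s\n' "$sample" | offload_state tcp-segmentation-offload

# On the server itself:
#   ethtool -k eno1 | offload_state tcp-segmentation-offload
```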

NVidia PCIe Bus Error

On my X99-E WS + Xeon E5-1660 + NVidia Quadro P400 machine running kernel 6.8.12-8-pve, which is currently my primary Proxmox box, I'm now seeing a never ending stream of correctable errors from the GPU. The GPU's plugged into the first slot. Kernel messages with these errors look like this:

root@pve:~# dmesg -T | tail

[Sat Aug 2 22:10:12 2025] pcieport 0000:00:03.0: AER: Correctable error message received from 0000:05:00.0

[Sat Aug 2 22:10:12 2025] nvidia 0000:05:00.0: PCIe Bus Error: severity=Correctable, type=Physical Layer, (Receiver ID)

[Sat Aug 2 22:10:12 2025] nvidia 0000:05:00.0: device [10de:1cb3] error status/mask=00000001/0000a000

[Sat Aug 2 22:10:12 2025] nvidia 0000:05:00.0: [ 0] RxErr (First)

[Sat Aug 2 22:10:14 2025] pcieport 0000:00:03.0: AER: Correctable error message received from 0000:05:00.0

[Sat Aug 2 22:10:14 2025] nvidia 0000:05:00.0: PCIe Bus Error: severity=Correctable, type=Physical Layer, (Receiver ID)

[Sat Aug 2 22:10:14 2025] nvidia 0000:05:00.0: device [10de:1cb3] error status/mask=00000001/0000a000

[Sat Aug 2 22:10:14 2025] nvidia 0000:05:00.0: [ 0] RxErr (First)

root@pve:~# lspci -nnn | grep 05:00

05:00.0 VGA compatible controller [0300]: NVIDIA Corporation GP107GL [Quadro P400] [10de:1cb3] (rev a1)

05:00.1 Audio device [0403]: NVIDIA Corporation GP107GL High Definition Audio Controller [10de:0fb9] (rev a1)

One possible fix is to disable PCIe Active State Power Management by adding pcie_aspm=off to the kernel boot arguments in /etc/default/grub, then apply it to grub by running update-grub before rebooting.
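Editing the kernel arguments can be scripted so that the change is applied only once. The sketch below works on a scratch file rather than the real /etc/default/grub; add_kernel_arg is a hypothetical helper. Note that hosts booting through systemd-boot (for example ZFS root on UEFI) read their arguments from /etc/kernel/cmdline and are refreshed with proxmox-boot-tool refresh instead, as far as I know.

```shell
#!/bin/bash
# add_kernel_arg FILE ARG: append ARG to GRUB_CMDLINE_LINUX_DEFAULT in FILE
# unless it is already present.
add_kernel_arg() {
    local file="$1" arg="$2"
    # Already present (delimited by a space or a quote on both sides)?
    if grep -q "^GRUB_CMDLINE_LINUX_DEFAULT=.*[\" ]${arg}[\" ]" "$file"; then
        return 0
    fi
    # Insert the argument just before the closing quote.
    sed -i "s|^\(GRUB_CMDLINE_LINUX_DEFAULT=\"[^\"]*\)\"|\1 ${arg}\"|" "$file"
}

# Demonstration on a scratch file.
grub_file=$(mktemp)
echo 'GRUB_CMDLINE_LINUX_DEFAULT="quiet"' > "$grub_file"
add_kernel_arg "$grub_file" pcie_aspm=off
add_kernel_arg "$grub_file" pcie_aspm=off   # second call changes nothing
cat "$grub_file"

# On the host: add_kernel_arg /etc/default/grub pcie_aspm=off && update-grub
```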

A side note: adding acpi_enforce_resources=no to the kernel args and rebooting did not help. There are also some suggestions to add pci=noaer, which will turn off error reporting. I did not try this after disabling ASPM.